When I started programming, about 20 years ago, I was writing Lua scripts in Notepad. I was scripting for my own OpenTibia servers, built around Tibia, a game I used to play when I was 12. Then a friend of mine recommended that I try Notepad++. The difference was insane:

- I could have multiple files open simultaneously!

- Basic error highlighting in the editor!

- From no highlighting to highlighting and autocomplete!

It was a shock, and my productivity soared. I didn't have to remember every local function in my files: I could just start typing the name, and it would autocomplete just fine.

Nowadays I still use Notepad++ for a few things when I’m on Windows. It’s blazingly fast, easy to set up, and handles large files wonderfully. For example, reading log files with Notepad++ is about as pleasant as it can be.

Later, when I started working on more complex software, my journey with feature-rich editors and IDEs began. I discovered Emacs and Code::Blocks, and got used to graphical editors, SLIME, keyboard macros and other useful tools, like in-editor compiling (I just can't live without C-c C-c).

Then came Eclipse, IntelliJ IDEA, VSCode, Vim, Neovim, nano... and nowadays, my main tools are Emacs and IntelliJ IDEA.

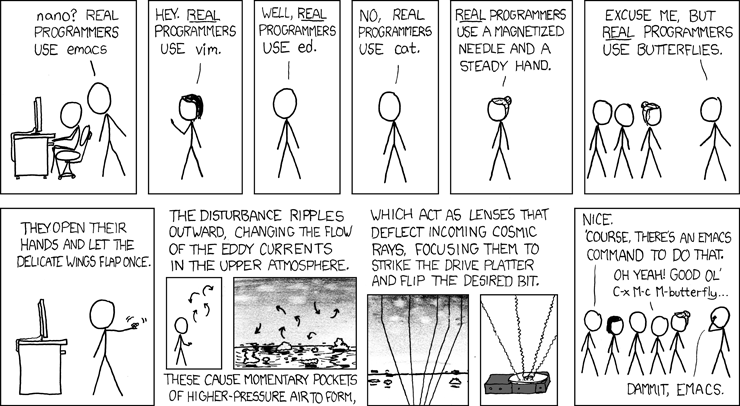

Every time I use Emacs, I have this XKCD in mind.


Why do I still use Emacs?

When I write software, I have to be in a specific mood. I need focus, and Emacs is brilliant for that. Emacs only shows what matters: my files, the text I'm working on, and extremely basic feedback whenever I start to derail. It keeps me in the loop. Emacs optimises what I'm doing right now by correcting my flow and stripping away everything superfluous. It gives me what I need as soon as possible, so I don't have to look anywhere else.

This isn’t about engagement: Emacs doesn’t need me to be there; it just makes sure it’s useful while I’m there.

In software development, we usually talk about two types of complexity:

Accidental Complexity

This is the complexity that isn't inherent to the problem we're working on but comes from everything orbiting around it. It depends on the type of problems you're solving, but for most developers it includes compiling code, setting up CI/CD pipelines, monitoring, or communicating between teams.

Essential Complexity

This is the kind of complexity we actually have to solve: the problem statement, the domain we’re working in. It’s inherent to the problem itself. When you’re implementing software, this is the kind of complexity you’re addressing.

And this is the point

IDEs used to give us the space to think by removing accidental complexity. Agentic IDEs replace our thinking by trying to solve the essential complexity. We, as developers, look for fast feedback loops, and we've designed our tooling and processes around that:

- Editors and IDEs remove the bureaucracy of looking for documentation, methods, or matching lines of code with compiler errors.

- When working in a browser, seeing and understanding the latest changes is just one refresh away.

- Tests are meant to be run quickly while coding so we understand if we break something.

- Agile is about fast feedback loops so we understand whether what we do is meaningful for users.

All these feedback loops were designed to grow our understanding of the essential complexity of what we were solving, empowering us and making us better.

Agentic tools work differently: they focus on finishing things. Whether you work in VSCode, Cursor or Windsurf, the tool just tries to finish what you are doing without really understanding it. Unless their agents can read minds, which I doubt, they are just guessing based on the surrounding code, not on what you are trying to solve.

Yes, you can give them context: you write instructions, you use the chat, you embed files. But the focus is still not on understanding; it is on finishing things. This is about chasing that shot of dopamine, about feeling productive.

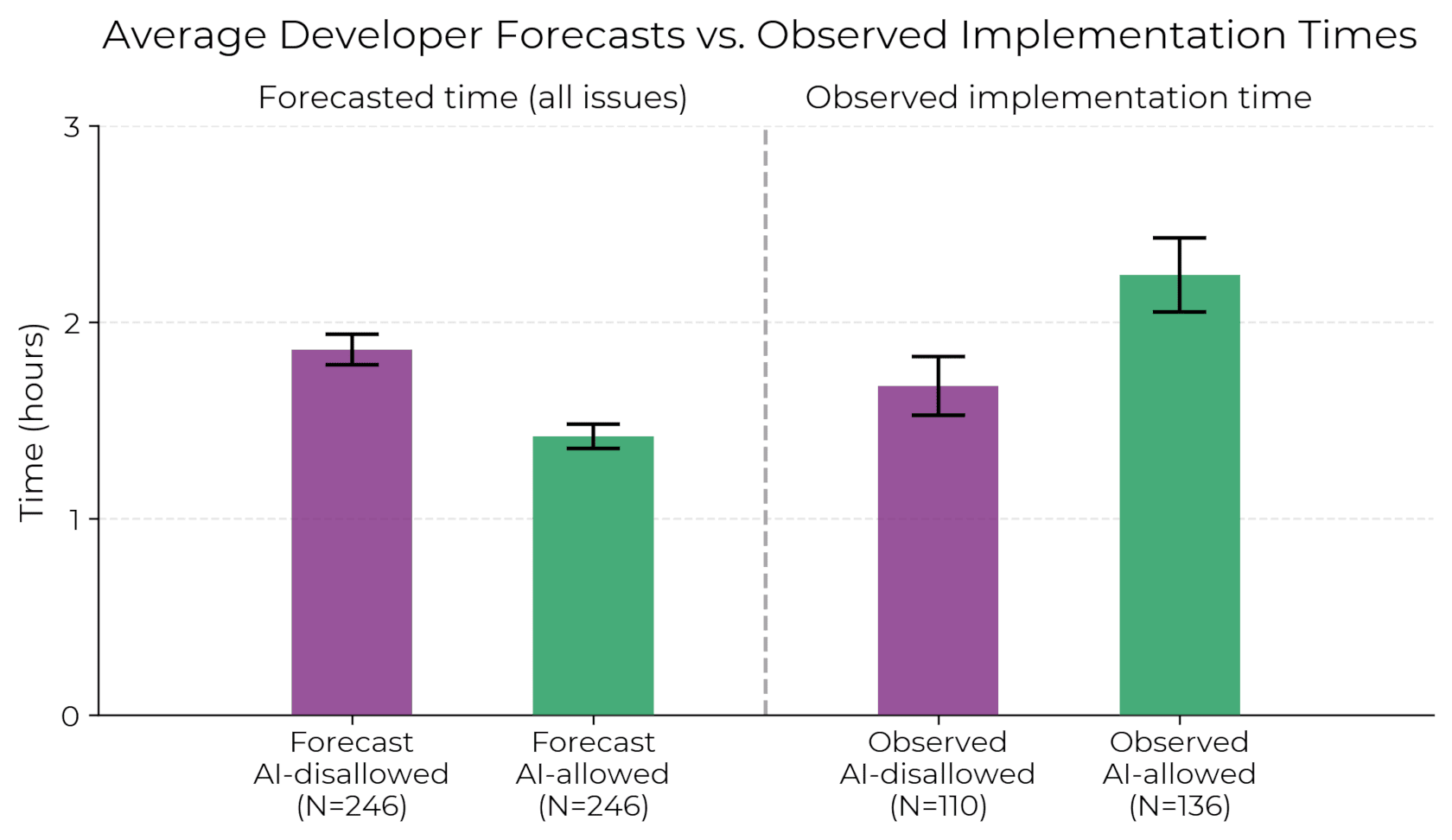

Some studies, like this one from METR, have shown that AI-assisted coding does not currently boost productivity. However, developers believe they are more productive when using AI tools. The reason is that we are confusing output with outcome. We assume that the more things we do per second, the better, but that doesn't hold when solving problems.

Average Developer Forecasts vs. Observed Implementation Times


Every time your IDE does things for you and they seem to work, it gives you a shot of dopamine and reinforces that behaviour. It needs you to be engaged with it. The more dopamine it gives you by autocompleting, suggesting new features and improvements, and inserting itself into your flow, the more tokens you consume and the more costly it becomes for you. And this is an extremely important shift: it is not about really improving developer productivity; it is about doing more things and making the developer feel more productive.

By doing more things, we feel that productivity boost: we see lots of code being changed and refactored, and every prompt produces more code doing more things. However, this boost is not real, because software engineering is slow and meant to be slow. It has already been shown that models tend to emotionally manipulate their users, and even OpenAI has acknowledged it. The main reason is that companies building AI models profit from usage, so they need engagement. And it's easy to keep a developer engaged if you insert yourself into their development flow and produce output fast.

AI-assisted coding vs Vibe Coding

I personally don't like the term vibe coding, but I need to clarify something before proceeding: I'm focusing on AI-assisted coding, not vibe coding. I'm talking about software developers. However, I will spend a minute sharing my thoughts on vibe coding.

I'm actually in favour of vibe coding as long as that code doesn't go to production or handle sensitive data: play with code, understand what you can achieve, open the MVP to a few customers and test your idea. If it works, hire an engineering team to develop it further.

However, the issue with vibe coding nowadays is expectations: non-developers don't know that the complexity of our craft is not just writing code. Security, performance, maintainability, cost-effectiveness, scalability and accessibility are all complex, and they add up pretty quickly. Writing the first 80% is fun, but getting to the finish line is really hard. And while it's too early for conclusions, I think that's why vibe coding platforms are seeing a decline in usage.

The future of AI-assisted coding

I'm not a fortune teller; I don't have a crystal ball. However, I've seen this happen before. Tools that tried to replace thinking with solutions tend to disappear. How many companies use low-code platforms for production code nowadays? Do you remember Dreamweaver? Some of their ideas get absorbed into mainstream tooling, and the original tool ends up as a niche product.

I believe, for example, that MCP is here to stay, as long as LLMs don't become extremely expensive or local SLMs are adopted more widely. AI-generated code might become a better autocomplete, or be used to remove more accidental complexity instead of replacing the developer. I think agentic IDEs have to shift their focus and become more invisible: hint inline in the code, don't be noisy, be intentional about what you do, do a few things well rather than many things poorly, and, finally, make the developer better instead of attempting to finish their work.

How can you use AI for coding?

AI can be useful for coding, but as a tool that removes accidental complexity. I use AI for a few things outside my IDE, and they help me a lot day to day:

- Validating documentation: Write documentation and ask the AI to suggest grammatical improvements or point out anything missing. Then rewrite it yourself.

- Finding documentation: Gemini is really good at that. It has helped me find APIs I wasn't aware of, which let me fix major problems.

- Generating code snippets for end users: Other developers on your team will use your APIs. Make sure AIs understand them by generating code examples that the agentic IDE can then pick up automatically.

- Doing simple code conversions: For example, writing queries for your database. However, make sure you understand the generated code.

- Finding corner cases in requirements: Take a task description and ask the AI to find corner cases. It has been useful for me so far.

"You are not using AI properly! You are holding it wrong! It works for me!"

I'm sure it works for you. However, analyse it again: read the studies, analyse your workflow. Is it really working that well for you? Are you sure you're not just feeling that it does? Are you sure other people are just holding it wrong?

I know pretty well how LLMs work and what they can really do. I'm obviously not an AI expert, and it's a domain I'm not even mildly interested in. However, I have to learn. Eight or nine years ago, I worked in NLP and researched transformers for my job on a sentiment-analysis tool for news. Now I'm implementing an MCP server, and I designed and co-implemented an accuracy framework across different models to optimise prompt descriptions.

So, yes, I know how to hold it.